TL;DR

We’ve solved the reliability challenges with our Kubernetes-based container builds by moving to ephemeral, fully-isolated BuildKit instances - and we’ve packaged our solution into an open-source Kubernetes operator called buildkit-operator! In this post, we’ll revisit the pain points from our previous architecture, walk through our new design, highlight the results, and share how you can use it too.

Recap: The Original Problem

In our previous post on building containers with BuildKit in Kubernetes, we shared how moving off EC2 and onto Kubernetes gave us faster builds and a simpler operational model, but also introduced new challenges.

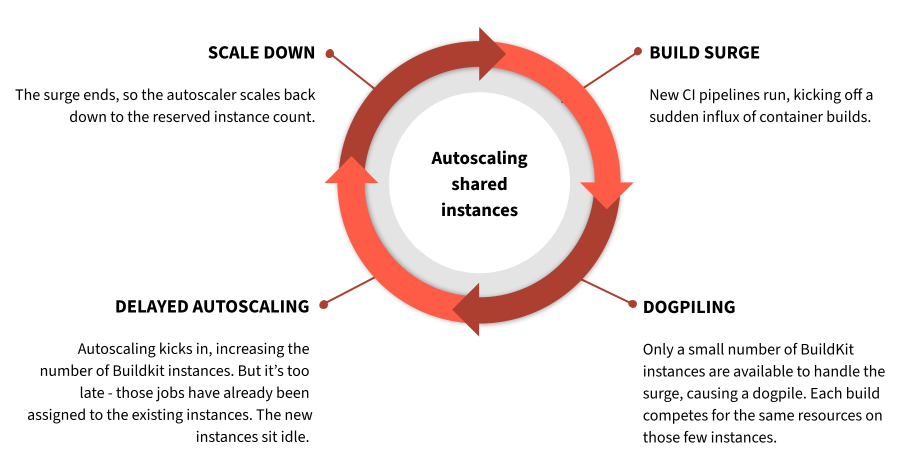

While BuildKit itself worked well enough, our shared, long-lived BuildKit deployments created issues:

- Unpredictable Failures – Up to 4% of builds failed for reasons outside the engineer’s control: cryptic errors, jobs freezing mid-build, or builds getting killed mid-execution.

- Resource Contention – Multiple CI jobs could land on the same builder, leading to “noisy neighbor” slowdowns or crashes.

- Aging Instances – Long-lived builders could get “wedged” into bad states, only fixed by manual restarts.

- Autoscaling Limits – We struggled to scale builders quickly and accurately, leading to either bottlenecks or wasted resources.

- Limited Observability – We couldn’t easily identify which CI jobs landed on which builder instance (for debugging).

This led to flaky pipelines, slower deploys, and frustrated engineers who needed frequent support. And the problems only worsened as teams leaned further into automation and AI to iterate faster.

Ephemeral BuildKit via an Operator

From the start, we knew our ideal future state: each CI job should get its own dedicated BuildKit instance, created on-demand and torn down automatically. No resource sharing, no manual cleanup, no lingering bad state.

Why an Operator?

We considered a few approaches first - better autoscaling, a custom pool manager, even Docker’s Kubernetes driver - but each had significant tradeoffs that wouldn’t work for us:

- Better Autoscaling: Doesn’t solve the noisy neighbor problem or guarantee isolation.

- Custom Pool Manager: Managing pools of builders adds complexity, and we’d have to build all of it (including the API, lifecycle management, and cleanup) ourselves.

- Docker Kubernetes Driver: Requires permissions to launch arbitrary pods; no way to guarantee cleanup if the CI job fails or is canceled.

We ultimately landed on building a custom Kubernetes operator because it let us:

- Avoid Overly Broad Permissions – CI jobs don’t need rights to launch arbitrary pods, just the ability to create `Buildkit` CRs.

- Guarantee Cleanup – Using Kubernetes `ownerReferences`, each BuildKit pod is automatically deleted when the owning CI job terminates for any reason.

- Enforce Defaults & Policies – The operator can inject the right configuration (including our pre-stop script for graceful shutdowns!) into every instance without CI pipelines having to manage it.

Here’s a simplified version of what a CI job creates:

[Code listing not preserved: example `Buildkit` resource]
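As a rough stand-in for such a request, the sketch below assembles a `Buildkit` custom resource as a plain Python dict. Only the kind `Buildkit`, the use of a template, and `ownerReferences` come from this post; the API group `buildkit.example.com/v1alpha1`, the `spec.template` field name, and the helper `buildkit_request` are placeholders for illustration, not the operator's actual schema. Check the GitHub repo for the real one.

```python
def buildkit_request(name, template, owner_pod):
    """Build a manifest for a hypothetical `Buildkit` custom resource.

    `owner_pod` is the manifest of the CI job's own pod (assumed here to be
    the owner), so Kubernetes garbage collection can remove the resource
    once that pod is deleted.
    """
    owner_meta = owner_pod["metadata"]
    return {
        # Placeholder group/version: NOT the operator's real API group.
        "apiVersion": "buildkit.example.com/v1alpha1",
        "kind": "Buildkit",
        "metadata": {
            "name": name,
            # Owners must live in the same namespace as their dependents.
            "namespace": owner_meta["namespace"],
            "ownerReferences": [
                {
                    "apiVersion": "v1",
                    "kind": "Pod",
                    "name": owner_meta["name"],
                    "uid": owner_meta["uid"],
                }
            ],
        },
        # Assumed field name: which pod template the operator should use.
        "spec": {"template": template},
    }


# Example with made-up identifiers.
ci_pod = {
    "metadata": {
        "name": "ci-job-1234",
        "namespace": "ci",
        "uid": "0b9a6c1e-0000-4000-8000-000000000000",
    }
}
manifest = buildkit_request("build-1234-amd64", "amd64-default", ci_pod)
```

The `apiVersion`, `kind`, `name`, and `uid` keys inside `ownerReferences` are the standard Kubernetes fields; everything under `spec` is an assumption.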

The operator watches for these resources, spins up a pod based on the specified template, and updates the resource’s status.endpoint so the CI job can connect. When the CI job ends, the ephemeral BuildKit pod disappears automatically.
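On the CI side, "connect once `status.endpoint` is set" amounts to a small polling loop. Here is a minimal sketch of that idea, assuming the job can fetch the resource as a dict through some callable `fetch` (for example, a thin wrapper around a Kubernetes client). The helper name `wait_for_endpoint`, its defaults, and the `tcp://` address format are illustrative assumptions, not part of the operator.

```python
import time


def wait_for_endpoint(fetch, timeout=120.0, interval=2.0,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll `fetch()` until the resource reports status.endpoint.

    `fetch` is any zero-argument callable returning the resource as a dict.
    Raises TimeoutError if no endpoint appears within `timeout` seconds.
    """
    deadline = clock() + timeout
    while True:
        resource = fetch()
        # `status` may be missing or empty until the operator has reconciled.
        endpoint = (resource.get("status") or {}).get("endpoint")
        if endpoint:
            return endpoint
        if clock() >= deadline:
            raise TimeoutError(
                "BuildKit endpoint not reported within %.0f seconds" % timeout
            )
        sleep(interval)


# Example with a fake fetch that becomes ready on the third poll.
_responses = iter([
    {},
    {"status": {}},
    {"status": {"endpoint": "tcp://10.0.0.5:1234"}},  # made-up address
])
endpoint = wait_for_endpoint(lambda: next(_responses), interval=0)
```

The returned address could then be handed to the build client, for instance via `buildctl --addr`. A watch would avoid polling altogether; the loop is just the simplest form to reason about.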

Solving Our Key Problems

- Isolation by Design – Every job gets its own BuildKit pod (per CPU architecture) - no shared state, no noisy neighbors.

- Automatic Teardown – `ownerReferences` ensure BuildKit pods are always cleaned up when jobs finish.

- Graceful Shutdowns – Our pre-stop hook is automatically injected into the BuildKit container, so builds finish cleanly even during pod termination events.

- Security Controls – CI jobs can only request a BuildKit instance via CRDs - no blanket permissions to deploy arbitrary, long-lived workloads to the cluster.

- Observability – The operator can annotate BuildKit pods with pipeline metadata, giving us better traceability for debugging and cost attribution.
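To make the injection and annotation points above concrete, here is a small sketch of how an operator might stamp defaults onto a pod manifest before creating it. It is not the operator's code: the function `apply_defaults`, the container name `buildkitd`, the script path, and the annotation key are all hypothetical. The `lifecycle.preStop.exec.command` and `metadata.annotations` fields are standard Kubernetes pod fields.

```python
import copy


def apply_defaults(pod, prestop_command, annotations, container_name="buildkitd"):
    """Return a copy of `pod` with a preStop hook and annotations added.

    The input manifest is left untouched, so the same template can be
    reused for every request.
    """
    result = copy.deepcopy(pod)

    # Attach pipeline metadata for traceability and cost attribution.
    metadata = result.setdefault("metadata", {})
    metadata.setdefault("annotations", {}).update(annotations)

    # Inject the graceful-shutdown hook into the BuildKit container only.
    for container in result["spec"]["containers"]:
        if container["name"] == container_name:
            container.setdefault("lifecycle", {})["preStop"] = {
                "exec": {"command": list(prestop_command)}
            }
    return result


# Example with made-up names and paths.
template = {
    "metadata": {"name": "buildkit-amd64"},
    "spec": {"containers": [{"name": "buildkitd", "image": "moby/buildkit"}]},
}
pod = apply_defaults(
    template,
    prestop_command=["/scripts/pre-stop.sh"],          # hypothetical path
    annotations={"ci.example.com/pipeline-id": "1234"},  # hypothetical key
)
```

Keeping this logic in the operator rather than in each pipeline means a change to the hook or the annotation scheme rolls out in one place.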

Results

Since rolling out the operator, we’ve seen significant improvements:

- Higher Reliability – Over 99.97% of builds complete successfully without unexpected failures.

- Faster Builds – No contention means more predictable performance - even when accounting for the overhead of creating new pods on-the-fly.

- Fewer Wasted Resources – BuildKit pods are only running when needed, and are tuned to the resource requirements of the build, thus reducing idle resource costs.

- Happier Engineers – Fewer support requests and less time spent on flaky builds.

Try It Yourself

We’ve open-sourced our buildkit-operator so other teams can benefit from our approach. You can install it into your own cluster via our Helm chart, define your templates, and start provisioning ephemeral BuildKit instances for your CI workloads in minutes!

Full documentation and examples are available in the GitHub repo.

Final Thoughts

This journey started with the simple desire for reliable container builds in CI - and ended with a lightweight, reusable operator that solves the problem for us and (hopefully) for you too.

If your team is struggling with BuildKit reliability on Kubernetes, give our operator a try. We’d love to hear how it works for you and what ideas you have for making it even better.