When we started building our checkout experience on Android, we wanted to make mobile data entry as smooth as possible. At its core, checkout is a painful experience riddled with text entry fields, lost data and obscure errors. We drew inspiration from many great posts about optimizing the checkout experience for mobile, each with its own set of best practices, tips and tricks. We tried our best to distill that pool of information into a few key user stories to focus on:

I do not want to enter data that you should be able to infer

I do not want to type the same information more than once

You should already know where I am when I need to enter my address

Drawing on those recommendations and best practices, and imitating the best checkout forms we saw, we built what we think is a great component for any checkout form: an address autocompletion view. Today, we’re making it open source: PlacesAutocompleteTextView.

Text entry on mobile

Mobile devices have many strategies for simplifying text entry: autocorrection, input suggestions and swipeable keyboards all do wonders for the user experience. Despite all that, physically typing text remains challenging: digital keyboards generally have smaller keys, users do not always hold the phone in both hands, and autocorrect can only correct so well.

This text entry problem is exacerbated when users need to type postal addresses for billing and shipping information. Addresses often switch between text and numeric input, contain proper nouns that are not in autocorrect dictionaries and are usually broken up into several input fields that the user has to navigate.

We looked at how other sites and apps were limiting the amount of data that a user needed to enter to complete a checkout form and saw that an autocompleting address entry field was far above the rest in terms of reducing keystrokes.

PlacesAutocompleteTextView

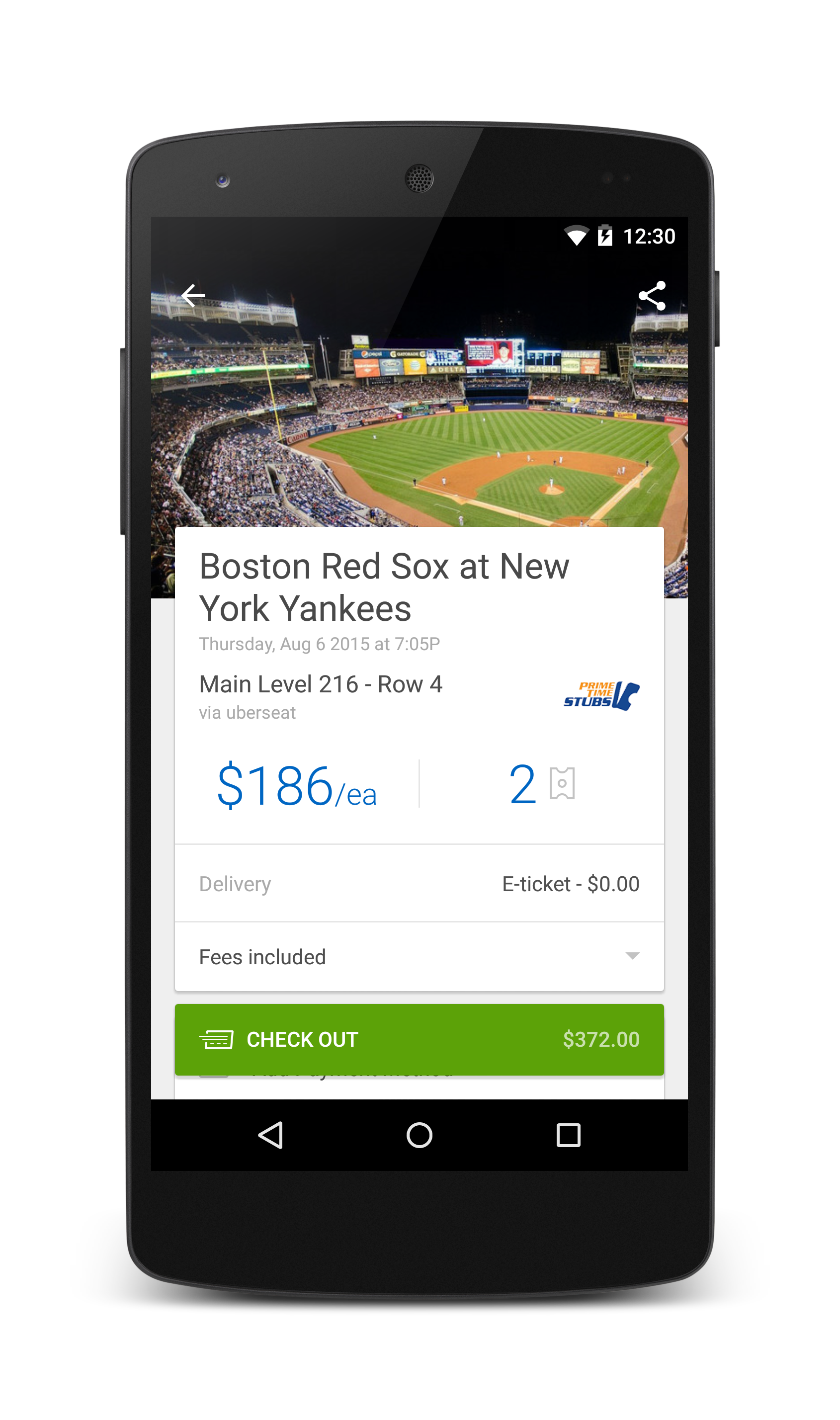

PlacesAutocompleteTextView is an extension of the Android framework’s AutoCompleteTextView that queries the Google Maps Places Autocomplete API to suggest addresses as soon as a user starts typing. Here’s a demo of the view in action in the checkout form of the SeatGeek for Android app:

By autocompleting addresses as a user enters them, we’re already pretty far along the path to achieving the goal of our first story: not entering data that you should be able to infer. But let’s take a deep dive into a few of the unique features that take the PlacesAutocompleteTextView experience to the next level.
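For readers who haven’t used the web service behind this view, here is a rough sketch of what a Places Autocomplete request looks like. It is not PlacesAutocompleteTextView’s internal code: the `AutocompleteRequests` class and `buildUrl` method are hypothetical, the API key is a placeholder, and restricting predictions with `types=address` is our assumption about what an address form wants. The sketch only builds the request URL; issuing the HTTP call and parsing the `predictions` array in the JSON response are left out.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Hypothetical helper that builds a Places Autocomplete web service request URL.
final class AutocompleteRequests {
    // Places Autocomplete web service endpoint, JSON output.
    static final String ENDPOINT =
            "https://maps.googleapis.com/maps/api/place/autocomplete/json";

    private AutocompleteRequests() {}

    static String buildUrl(String input, String apiKey) {
        return ENDPOINT
                + "?input=" + encode(input)
                + "&types=address" // assumption: only street-address predictions are wanted
                + "&key=" + encode(apiKey);
    }

    // Form-encodes a query value; spaces become '+', '&' becomes %26.
    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // Every Java platform is required to support UTF-8, so this cannot happen.
            throw new AssertionError(e);
        }
    }
}
```

For example, `AutocompleteRequests.buildUrl("235 Park", "YOUR_API_KEY")` produces a URL whose `input` parameter is `235+Park`. Encoding the typed text matters because addresses routinely contain spaces, `#` and `&`.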

Place history

Repeating yourself is the worst. Sure, there are probably more frustrating things, but in the context of checkout forms, having to type the same address more than once is ludicrous, even with full autocompletion. Fortunately, PlacesAutocompleteTextView maintains a history of results selected from the Places API, so entering a past result is a breeze.

Normally, the threshold for querying the Places API is 3 typed characters, but if the user has previously selected a Place from the popup, PlacesAutocompleteTextView will also suggest past results. By default, it saves up to 5 places and offers them as suggestions as soon as the user starts typing. This history is stored in a file on the filesystem, so previously entered addresses are remembered across sessions as well.
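The history behavior described above can be sketched as a small bounded, most-recent-first list. This is a hypothetical illustration, not the library’s code: the `PlaceHistory` class, the case-insensitive substring matching used to pick history suggestions and the one-description-per-line file format are all our assumptions. Only the 3-character API threshold and the 5-place default come from the text.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Locale;

// Hypothetical bounded, most-recent-first history of selected place descriptions.
final class PlaceHistory {
    static final int DEFAULT_MAX_SIZE = 5;     // "up to 5 places" by default
    static final int API_QUERY_THRESHOLD = 3;  // characters typed before querying the API

    private final int maxSize;
    private final Deque<String> entries = new ArrayDeque<>();

    PlaceHistory(int maxSize) {
        this.maxSize = maxSize;
    }

    // Records a selection: a re-selected place moves to the front, the oldest is evicted when full.
    void add(String description) {
        entries.remove(description);
        entries.addFirst(description);
        while (entries.size() > maxSize) {
            entries.removeLast();
        }
    }

    // Snapshot of the history, newest first.
    List<String> recent() {
        return new ArrayList<>(entries);
    }

    // The Places API is only queried once enough characters have been typed.
    static boolean shouldQueryApi(CharSequence typed) {
        return typed.length() >= API_QUERY_THRESHOLD;
    }

    // Assumed matching rule: history entries containing the typed text, ignoring case.
    List<String> historySuggestions(String typed) {
        String needle = typed.toLowerCase(Locale.ROOT);
        List<String> matches = new ArrayList<>();
        for (String entry : entries) {
            if (entry.toLowerCase(Locale.ROOT).contains(needle)) {
                matches.add(entry);
            }
        }
        return matches;
    }

    // Persists one description per line, newest first, so history survives app restarts.
    void save(Path file) throws IOException {
        Files.write(file, entries, StandardCharsets.UTF_8);
    }

    static PlaceHistory load(Path file, int maxSize) throws IOException {
        PlaceHistory history = new PlaceHistory(maxSize);
        if (Files.exists(file)) {
            List<String> lines = Files.readAllLines(file, StandardCharsets.UTF_8);
            // Replay oldest first so the newest entry ends up at the front again.
            for (int i = lines.size() - 1; i >= 0; i--) {
                history.add(lines.get(i));
            }
        }
        return history;
    }
}
```

Moving a re-selected place to the front instead of appending a duplicate keeps the five slots filled with five distinct addresses, ordered by how recently they were used.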

By remembering the locations the user has previously selected, PlacesAutocompleteTextView checks the box next to our second user story, “I do not want to type the same information more than once”.

Location biasing

154 million. That’s the number of postal addresses in the United States [source]. That’s a lot. Each character the user types narrows the set of potential addresses significantly, but not nearly enough to provide meaningful results. How can we ensure that the most relevant addresses are surfaced to the user? There are probably a few good heuristics for this problem, but by default PlacesAutocompleteTextView biases the place results to the location of the user. So if you’re in New York at the SeatGeek office, “235 Park” will complete to 235 Park Avenue South, New York, NY.

Location biasing can be done in two ways. By default, the Google Maps API biases autocomplete results based on the IP address of the device. This is a reasonably good method, but IP-based lookup may only be accurate to within a few miles, and it can break completely when used with proxies, VPNs and the like. Fortunately, most Android devices provide an easy (and more accurate) mechanism for determining location: GPS.

PlacesAutocompleteTextView exposes an API for biasing results toward an Android Location returned by the Android location APIs. This allows for highly accurate biasing and ensures that the most relevant addresses are at the top of the results list. This nails the user expectation outlined in our third user story, “You should already know where I am when I need to enter my address”.
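A rough sketch of the biasing parameters follows, assuming the request goes to the Places Autocomplete web service, which accepts `location` (as `lat,lng`) and `radius` (in meters) query parameters. The `LocationBias` helper and the radius value are hypothetical, not the library’s API. On Android, the coordinates would come from `Location.getLatitude()` and `Location.getLongitude()`. When no fix is available, the helper adds nothing, which leaves the service’s default IP-based biasing in place.

```java
import java.util.Locale;

// Hypothetical helper: formats Places Autocomplete biasing parameters from a device fix.
final class LocationBias {
    private LocationBias() {}

    /**
     * Returns "&location=lat,lng&radius=meters" for a known fix, or "" when there is no fix,
     * so the request falls back to the service's default IP-based biasing.
     */
    static String queryParams(Double latitude, Double longitude, int radiusMeters) {
        if (latitude == null || longitude == null) {
            return "";
        }
        // Locale.US guarantees a '.' decimal separator regardless of the device locale.
        return String.format(Locale.US, "&location=%.6f,%.6f&radius=%d",
                latitude, longitude, radiusMeters);
    }
}
```

Formatting with `Locale.US` matters: under a locale such as German, `%f` uses a comma as the decimal separator, which would corrupt the comma-separated `lat,lng` pair.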

What about Google Play Services’ Places API?

Google recently launched a Places API as part of Google Play Services. It provides a clean API for accessing Places Autocomplete, much like our library does, but there are two primary reasons we haven’t migrated to it yet:

- It’s still impossible to fetch the full Place Details through the Google Play Services implementation. Without them you cannot get the breakdown of address components, only a human-readable address string

- Our implementation handles binding the data to UI components, which speeds up development because you don’t have to implement all the UI yourself. With Google Play Services, you would still need to create your own filterable adapter, AutoCompleteTextView, etc.
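To illustrate why the full Place Details matter, here is a hypothetical sketch that maps the `address_components` array of a Place Details response onto separate checkout fields. The component type names (`street_number`, `route`, `locality`, `administrative_area_level_1`, `postal_code`, `country`) are the ones the Places API uses; the `AddressComponent` and `FormAddress` classes and the US-style “number, then street” ordering are our assumptions, and JSON parsing is omitted.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical model of one entry in a Place Details "address_components" array.
final class AddressComponent {
    final String longName;
    final String shortName;
    final List<String> types;

    AddressComponent(String longName, String shortName, String... types) {
        this.longName = longName;
        this.shortName = shortName;
        this.types = Arrays.asList(types);
    }
}

// Hypothetical checkout-form fields filled from those components.
final class FormAddress {
    String street = "";
    String city = "";
    String state = "";
    String postalCode = "";
    String country = "";

    static FormAddress from(List<AddressComponent> components) {
        FormAddress address = new FormAddress();
        String number = "";
        String route = "";
        for (AddressComponent c : components) {
            if (c.types.contains("street_number")) {
                number = c.longName;
            } else if (c.types.contains("route")) {
                route = c.longName;
            } else if (c.types.contains("locality")) {
                address.city = c.longName;
            } else if (c.types.contains("administrative_area_level_1")) {
                address.state = c.shortName;   // e.g. "NY" rather than "New York"
            } else if (c.types.contains("postal_code")) {
                address.postalCode = c.longName;
            } else if (c.types.contains("country")) {
                address.country = c.shortName; // two-letter code, e.g. "US"
            }
        }
        // Assumed US-style ordering: street number before the route name.
        address.street = (number + " " + route).trim();
        return address;
    }
}
```

A plain human-readable description string offers none of this structure, so each field would otherwise have to be parsed out of free text.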

Wrap up

By combining Google’s Places Autocomplete API with Android’s local storage and location features, we’re able to provide a painless address entry experience in our checkout flow. We also meet all of our goals: minimizing the number of characters typed, remembering previously entered addresses and surfacing the most relevant addresses to our users.

PlacesAutocompleteTextView is open source today and available on Maven, so add it to your checkout flow and improve the form experience for your users tomorrow.

– SeatGeek Android Team

P.S. If a UX-driven product development process like ours is something that appeals to you, we’re hiring.
